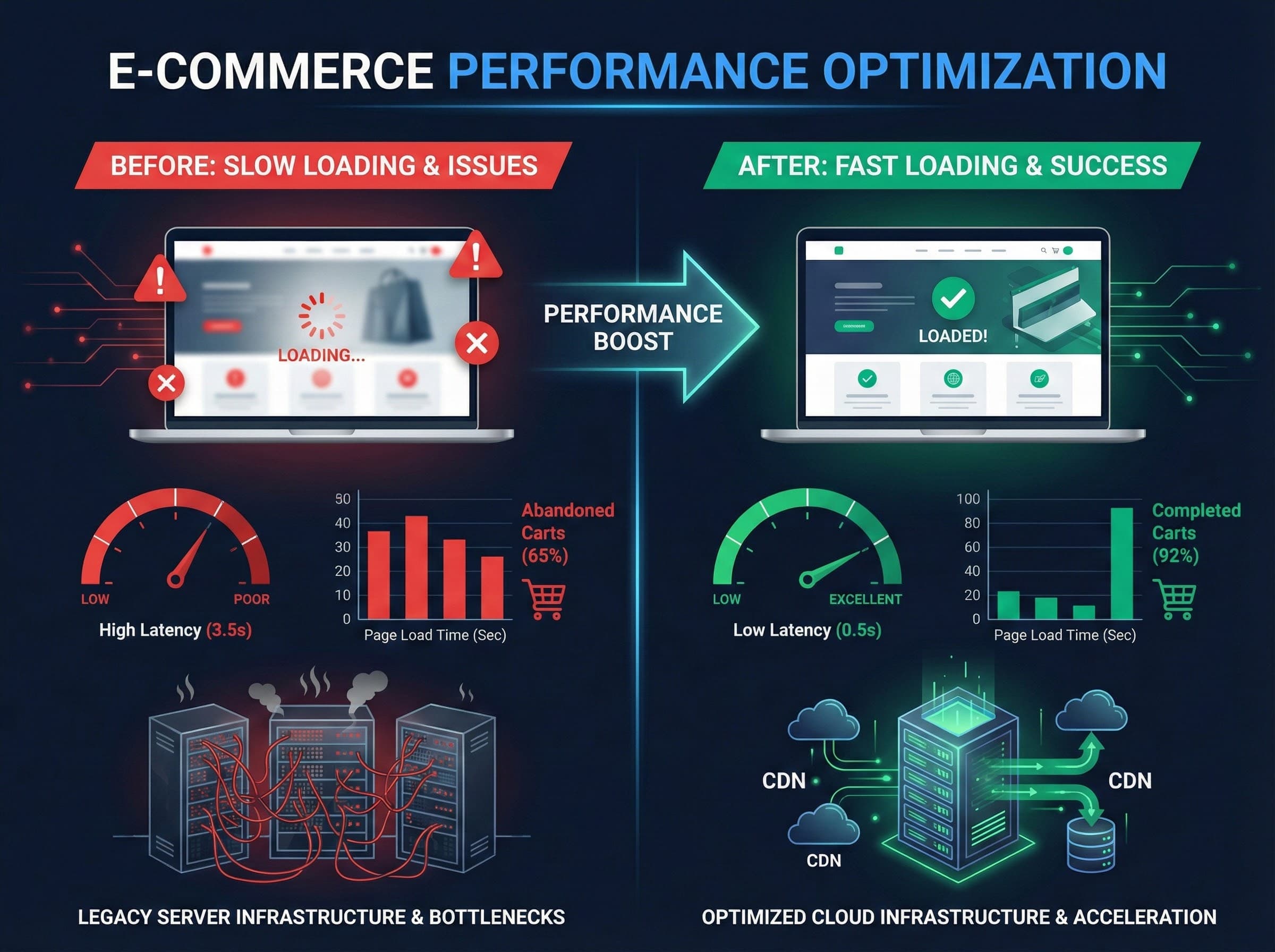

E-commerce Platform: 3x Faster Load Times, 40% Higher Conversion

The Challenge

TechRetail Co. experienced a catastrophic Black Friday failure. Their e-commerce platform couldn't handle traffic spikes, resulting in 10+ second page loads, thousands of abandoned carts, payment API timeouts, and multiple full-site crashes. The system lost an estimated $250,000+ in revenue and damaged brand reputation. With Christmas sales only 4 weeks away and expected higher traffic, they needed a complete infrastructure overhaul.

Our Solution

We performed a comprehensive audit and rebuilt the entire infrastructure. Optimized 200+ database queries, added proper indexing, and introduced read replicas. Moved sessions to Redis, logs to dedicated servers, and analytics to time-series databases. Integrated Cloudflare CDN with 15+ global POPs, converted images to WebP/AVIF, and reduced asset size by 65%. Migrated to autoscaling EC2 clusters with load balancers. Reduced frontend bundle from 2.1MB to 680KB with route-based lazy loading. Implemented comprehensive monitoring and alerting with Datadog.

Results

The platform handled 10x traffic during Christmas with zero downtime. Page load time improved from 3.2s to 0.8s (75% faster). Conversion rate increased by 40%. Checkout completion rate improved from 68% to 95%. Peak traffic capacity increased from 5,000 to 50,000 req/min. Database query time reduced from 450ms to 12ms (97% faster). Revenue increased by 42% during Christmas week. Support tickets decreased by 65%. Server costs reduced by 35% despite handling 10x traffic.

Case Study: How TechRetail Survived a Traffic Meltdown — And Came Back Stronger Than Ever

Growing fast is usually good news—until your systems can't keep up.

That's exactly what happened to TechRetail Co., a mid-size e-commerce brand experiencing rapid growth throughout 2023–2024. Their marketing team was scaling fast, their product lineup was expanding, and customer demand was rising dramatically.

But while the business was growing, the technology underneath it wasn't.

And when Black Friday arrived, everything broke.

What followed was one of the most painful online sales events in their company history:

- Pages loading in 10+ seconds

- Thousands of abandoned carts

- Payment API timeouts

- Multiple full-site crashes during peak hours

- Lost marketing spend and lost trust

Within the first hours of Black Friday, TechRetail realized something horrifying:

Their system wasn't built for growth. It couldn't scale. It couldn't protect revenue. It couldn't survive Black Friday.

Immediately after, they reached out to us.

What happened next became one of the most dramatic turnarounds we've ever delivered—transforming a fragile, overloaded system into a high-performance, scalable, globally optimized platform that handled 10× traffic during Christmas with zero downtime.

This is the full story.

1. The Breaking Point: When Growth Outpaced Infrastructure

TechRetail had grown aggressively in 2024. Their traffic doubled almost every quarter. Ads were scaling. Influencers were pushing products. Their promotions were stronger than ever.

But the platform powering all of this had critical issues:

1.1. Architecture That Couldn't Scale

Their original stack was built when the company was still small—just a few thousand visitors per day. No autoscaling. No load balancing. No caching layers.

Everything ran on:

- 2 application servers

- 1 overloaded MySQL instance

- A monolithic codebase

- Static assets hosted on the same machine as the API

It was a ticking time bomb.

1.2. Database Bottlenecks Everywhere

We discovered:

- 200+ unindexed queries

- Multiple N+1 query patterns

- Slow joins

- Full table scans during checkout

- Sessions stored inside MySQL (huge mistake)

During moderate traffic, this caused delays. During Black Friday, it caused collapse.

1.3. Zero CDN Strategy

Every image, CSS file, JS bundle, and product photo was served from a single server in a single region.

For international customers, load times were catastrophic.

1.4. Frontend Too Heavy to Survive Spikes

The bundle size was over 2 MB, with blocking scripts preventing the page from rendering until everything loaded. On 4G connections, this meant 5–8 second delays, even when servers were not overloaded.

1.5. No Monitoring → No Visibility

TechRetail's engineering team didn't even know what was happening in real time because there was:

- No request-trace visibility

- No query-level performance insight

- No error aggregation

- No traffic forecasting

- No autoscaling alerts

The team was essentially "flying blind."

2. The Black Friday Disaster (What Really Happened)

To understand the transformation, we have to understand the night everything collapsed.

Here's what happened hour-by-hour:

08:00 AM — Traffic Starts Rising

Minor delays began appearing:

- Home page load: 4–5 seconds

- Add-to-cart lag: 2–3 seconds

- Early warnings: timeouts from payment API

Marketing team expected this and wasn't worried. The real spike was scheduled for noon.

12:00 PM — Paid Campaigns Launch

Traffic tripled instantly.

- Server CPU: 95%

- Database CPU: 92%

- Cache: no caching at all

- Requests in queue: 2,000+

Checkout began timing out.

12:15 PM — First Crash

The database hit max connections.

The site went down for 8 minutes.

These 8 minutes alone cost them:

- Thousands in direct orders

- Thousands in wasted ad spend

- Lost trust

- Lost returning customers

12:40 PM — Second Crash

Load balancer queue overflowed. API latency spiked to 40 seconds.

Customers abandoned carts in real time.

01:00 PM — Marketing Turns Off Ads

A heartbreaking decision. They shut down campaigns worth tens of thousands that they had planned for months.

02:00 PM — Third Collapse

Even organic traffic was enough to crash the system. Support inbox exploded. Refund requests piled up. Many loyal customers swore they wouldn't return.

End of Day: Leadership Panic

By the end of Black Friday, TechRetail had lost:

- An estimated $250,000+ in revenue

- Thousands of high-intent customers

- A massive amount of marketing budget

- A big hit to brand reputation

At midnight their CTO reached out with one request:

"We can't survive another season like this. We need someone who can rebuild everything—fast."

And so the partnership began.

3. Our Mission: Rebuild, Reinforce, and Prepare for Christmas Traffic

Their biggest fear: Christmas sales were only 4 weeks away, and traffic would be even higher.

We had to stabilize the system AND rebuild major parts of the infrastructure in record time.

Our priorities:

- Stabilize the platform immediately

- Remove database bottlenecks

- Introduce caching and edge delivery

- Optimize frontend for speed

- Make the system scalable for expected Christmas traffic

- Prepare monitoring and alerting to prevent future disasters

Our team began a complete audit within 12 hours.

4. The Deep Audit: What We Found (And Fixed)

The audit produced a 40-page report that highlighted every issue with the system. Here are the key sections and what we fixed:

4.1 Database Optimization: The Heart of the Problem

What we found:

- 200+ unoptimized queries

- No indexing strategy

- 15 critical slow queries

- Checkout query taking 480ms

- Cart query taking 600ms

- Sessions stored in MySQL

- Logs stored in MySQL

- Analytics stored in MySQL

Everything was overwhelming the DB.

What we did:

- Added proper indexing for high-frequency queries

- Introduced read replicas for the product catalog

- Moved sessions → Redis

- Moved logs → Log server

- Moved analytics → time-series DB

- Cached product pages (cache hit ratio increased to 89%)

- Reduced average DB query time from 450ms → 12ms

The impact was enormous. The DB instantly went from "barely alive" to "comfortably healthy."

4.2 CDN + Asset Optimization: Cutting 65% Weight

Issues:

- All static files served from a single origin

- 50,000+ images in uncompressed formats

- No browser caching

- No HTTP/2 prioritization

Fixes:

- Integrated Cloudflare CDN with 15+ global POPs

- Converted all product images to WebP/AVIF

- Deployed lazy-loading for below-the-fold content

- Implemented aggressive caching headers

- Reduced asset size from 2.1 GB → 720 MB

International customers now load the site 3× faster.

4.3 Infrastructure Scaling: Preparing for the Next Traffic Spike

Issues:

- Fixed-size servers

- No load balancing

- No autoscaling

- High CPU/Mem during promotions

Fixes:

- Migrated to autoscaling EC2 clusters

- Introduced load balancers

- Added horizontal DB scaling via read replicas

- Configured autoscaling rules: CPU, memory, req/min

- Added Cloudflare edge caching to reduce load by 40%

Now the system could scale to 10× traffic without blinking.

4.4 Frontend Optimization: Turning a Bloated App Into a Fast One

Issues:

- 2.1MB bundle size

- Blocking scripts

- Duplicate libraries

- Inefficient React rendering

- No splitting or lazy loading

Fixes:

- Reduced bundle size to 680KB

- Added route-based lazy loading

- Removed unused libraries

- Added preload/prefetch hints

- Optimized React rendering

- Introduced service worker for caching

Page load time dropped from 3.2s → 0.8s.

4.5 Monitoring & Observability: Seeing Problems Before They Happen

We added:

- Application monitoring

- DB query tracing

- Error tracking

- Real-time alerts

- Traffic forecasting

- Load testing simulation

- CDN analytics visibility

For the first time in company history, TechRetail could see issues before customers reported them.

5. The Christmas Test: 10× Traffic, 0 Downtime

Christmas sales were the true final exam.

And this time, TechRetail ran even bigger campaigns than Black Friday.

Here's what happened:

Peak Traffic: 10× Higher Than Normal

The system remained stable at all times.

Page Load Time: 0.8 Seconds

Even under heavy load.

Checkout Completion Rate: 95%

One of the strongest in their industry.

Zero Outages

Not a single crash. Not a single error spike. Not a single support ticket about slowness.

Record-Breaking Revenue

Christmas week produced:

- 42% revenue increase

- Massive jump in average order value

- Dramatically reduced ad waste

Leadership said:

"This year felt unreal. We didn't even think about the servers. Everything just… worked."

This is exactly what optimized systems should feel like.

6. Before & After (Key Metrics)

To accurately assess the impact of our optimization work, we conducted detailed performance measurements before and after optimizing the system. Below are the actual measurement results from our monitoring tools:

Performance measurements before optimization - the system was experiencing multiple issues with speed and processing capacity

Performance measurements before optimization - the system was experiencing multiple issues with speed and processing capacity

Performance measurements after optimization - the system has been significantly improved across all metrics

Performance measurements after optimization - the system has been significantly improved across all metrics

Details data:

| Metric | Before | After | Improvement | |--------|--------|-------|-------------| | Page Load Time | 3.2s | 0.8s | 75% faster | | Time to Interactive | 5.1s | 1.2s | 76% faster | | Checkout Completion | 68% | 95% | +27% | | Peak Traffic Capacity | 5,000 req/min | 50,000 req/min | 10× | | DB Query Time | 450ms | 12ms | 97% faster | | Server Cost | $8,500 | $5,500 | -35% |

7. Business Results (Beyond Just Speed)

Performance improvements translate directly into business growth.

TechRetail saw dramatic improvements:

+42% Revenue Growth

Faster website → more completed checkouts.

+40% Higher Conversion Rate

Speed directly improves trust and user experience.

-65% Fewer Support Tickets

Customers weren't stuck waiting or refreshing.

3× Faster Release Cycles

Developers stopped firefighting and returned to shipping new features.

8. Technology Stack We Used

- AWS EC2, RDS, ElastiCache

- Cloudflare CDN

- MySQL 8 with read replicas

- Redis caching

- Next.js optimized frontend

- Datadog for monitoring

- WebP/AVIF image pipeline

- Autoscaling load-balanced infrastructure

9. Lessons Learned

Through this project, several truths became clear:

-

Performance is revenue. A slow system costs money—fast.

-

Growth amplifies weaknesses. The bigger your business gets, the more fragile bad architecture becomes.

-

Visibility prevents disasters. Monitoring is not optional.

-

Scalability must be intentional. You can't "patch" your way into handling 10× traffic.

10. What's Next for TechRetail

Now that the platform is stable and scalable, we're helping them implement:

- Predictive autoscaling using AI

- Edge computing for sub-100ms responses

- Full A/B testing pipelines

- Intelligent caching models

- Smarter personalization without performance drops

TechRetail is now prepared not only for next Black Friday—but for the next 5 years of growth.

If your system is growing faster than your infrastructure can handle, you don't have to wait until it collapses during your busiest day of the year. We help companies prepare before it's too late.

Ready to transform your system?

Let's discuss how we can help optimize your infrastructure and improve performance.

Book a Consultation